Table of Contents

Database Info and Management:

The data that is stored in XTdb is fairly simple, and always in chronological order which means that it is possible to use flat files for data and indexes which are potentially much faster to access and much less resource intensive than using an SQL database. The combination of indexing and disk caching that XTdb uses is very fast in retrieving data and building reports. If disk corruption does occur it is easy to rebuild both the data files and the indexes from remaining data and filter out any garbage data.

Using the Database Window:

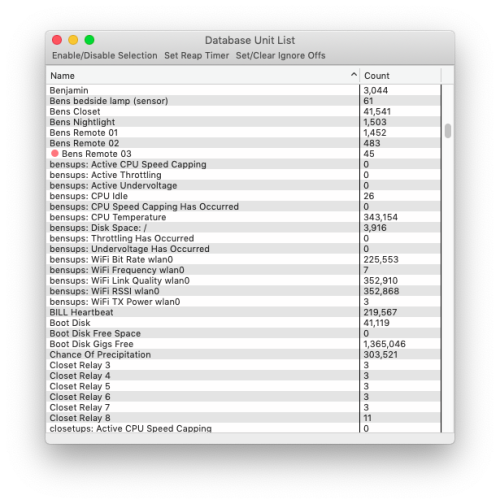

By default a database entry is created for every unit in XTension. You can see this list and run queries against them from the Database window. Open this window by going to the Database window and selecting “Show Database Window”

All the Units are listed here along with the current number of records stored in the database. There are shortcuts in the toolbar for making common changes to multiple selected Units in the list. The following will be applied to all Units selected in the list:

Enable/Disable: If you disable a Unit in XTdb it will no longer save data for that unit but will ignore any updates from XTension. This does not delete the data currently in the database, but if you have a reap timer set the data will eventually roll off. You can also delete the unit and it’s data completely from the Database Unit Detail window for that Unit.

Set Reap Timer: Opens a popup with some common reap timer values. These control how long data is stored for the unit. Data older than the interval you set will be removed every day early in the morning or immediately if you open the Database Unit Detail window and select the “Reap Data Now” button.

Set Clear IgnoreOffs: Sometimes it is not necessary to store the Off events for a unit and by not recording them you can save some disk space and improve query speeds on that Unit. This can be useful for Motion Sensors or other Units where only the On event is of interest.

Units shown with a red marker at the beginning of their name indicate a Unit for which there is an entry in XTdb for, but which no longer exists in XTension. (or if XTension is not running all Units will appear that way) See the section on managing Units no longer in XTension for more info on how to get rid of those.

Database Unit Detail Window:

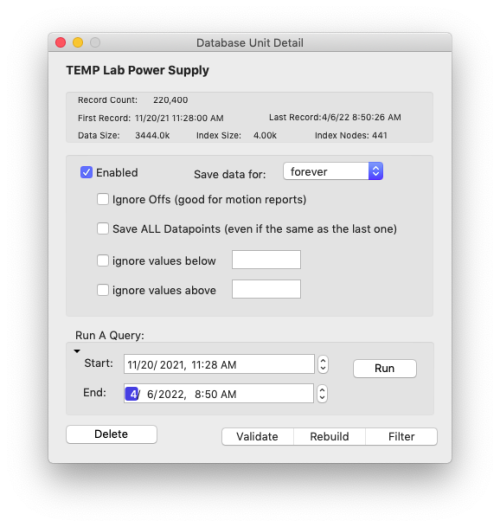

Double click a Unit in the list to open it’s Database Unit Detail window. The top information section shows you some statistics about this Unit in the database. How many records, the dates of the first and last records, the data file size and the index file size as well as the number of index nodes.

Enabled: If data for this unit is being saved to the database or not. This can also be set in the XTdb tab of the Edit Unit dialog in XTension.

Save data for: The reap timer for this Unit or how long to save data before it gets rolled off the back of the file. Popup values are 30, 60 or 90 days, 6 months, 1 year, 5 years or forever. The default for new Units is Forever. This can also be set in the XTdb tab of the Edit Unit dialog in XTension.

Ignore Offs: Does not save Off events if they are of no use in the generating of reports or graphs. For devices like motion sensors only the on events may be useful and so you can ignore the offs.

Save All Datapoints: By default only changes in value are saved to the database for analog devices like temperature sensors or other similar Units. If you wish to save all the updates to a Unit even if it is the same value as the previous one select this.

Ignore Values Above/Below: Some sensors and other devices will sometimes send wildly out of range data instead of a valid error message. This can confuse the auto ranging on graphs or confuse the various Meta Data units. If you know your device is going to occationally read a very out of scope value you can have XTdb ignore it here. You can also filter with the min/max values in XTension or through scripting in XTension if you prefer.

The query section and actions at the bottom of the window are documented in the next sections.

Running A Query:

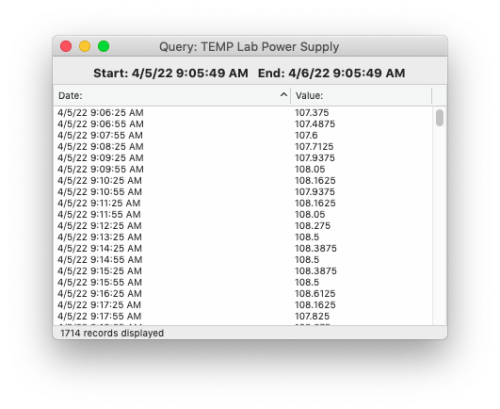

At the bottom of the Unit Detail window is an interface to running a query. By default the start and end fields are filled with the first and last record date and clicking the Run button would result in output for every saved datapoint. This might be very large. You can enter any date range that is of interest to you. The Drop Down triangle to the right of the Start field has some quick selections to set it for the last 24 hours, the last 30 days or to reset to the entire database for that Unit.

The query results window can take some time to populate, the window is complete when the progress indicator disappears and the record count stops incrementing. You can begin to interact with the window before that is complete.

Select Save from the File menu to save the output as a csv, comma separated value, file. This is easy to import into other applications for graphing or analysis.

You can copy lines out as regular text as well.

Deleting Individual Records: By highlighting lines in the output and selecting Delete from the Edit menu you can remove the selected lines from the output. This will cause the data files on disk to be conformed without the selected records. This is useful for removing some bad data that might have crept in while setting up or testing new devices.

It is also possible to run queries via AppleScript, see the AppleScript dictionary section for more info on that.

Delete, Validate, Rebuild, Filter:

At the bottom of the Database Unit Detail window are several buttons for other helpful functions.

Delete:

This will delete all the data saved for a Unit. If the Unit is still in XTension it is not possible to make it not show in the database, this just deletes any data saved so far for the Unit starting it over with a clean slate.

Validate:

If you suspect that data is either missing or not showing up in graphs as expected you can perform a validate of just this database. The Validate function runs inline and so the program may become unresponsive for a time when running on large datasets. The data file is read through from the beginning to verify that it is readable and all dates are validated to be in the proper order. If all goes well you’ll get a popup saying that the data looks OK. If not you can proceed to the Rebuild step next.

Rebuild:

If the data file has become corrupted or has been written out of order in spite of all the checks to prevent that it may be necessary to rebuild the data file and indexs. This button will do both. The data file is opened and loaded entirely into memory, then re-sorted by the dates, re-written and then the index re-created. This can be a time consuming and memory intensive process for large datasets. A background processing window is opened when you click this and the rebuild proceeds in the background. When complete the window will display that message and after a short time it will close. It is not necessary to do this unless you are having trouble with the data and that the Validate step above has returned an error.

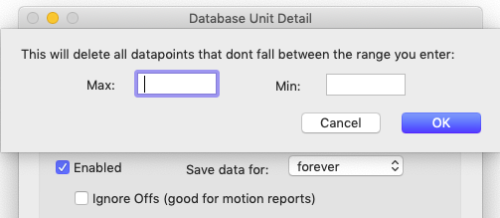

Filter:

The Filter button brings up the inline filter dialog. You can enter a range of numbers that are valid for the data and it will remove any data points where the value is out of range. If you have glitches in readings that are making graphs auto range so far that you can’t see the actual data you can use this to remove them. For example I have some temp sensors that return a value of 195 for an error. Since this is well above the value they are capable of reading they have saved that into the database before I set the min/max filtering. This lets you remove such values from the existing data.

Managing Units No Longer in XTension:

XTdb will not delete a Unit from its database when you delete a Unit in XTension. The data is kept until you specifically delete it. You can do this manually from each Units database detail window or you can use the Manage Units No Longer in XTension window. Any Units that are no longer found in the feed from XTension will be marked in the Database list window with a red marker ahead of their name.

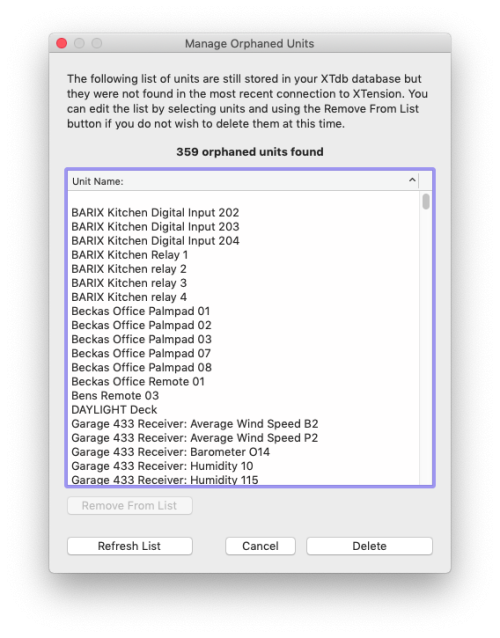

From the Database menu select the “Manage Units No Longer In XTension” menu.

This window contains a list of all “orphaned” Units still in the database but no longer found in the feed from XTension. All data for all Units in the list will be deleted when you click the delete button. NOT just the selected ones in the list. To edit the list you can highlight Units and use the “Remove From List” button to remove them from the list. This does not remove the data, just removes them from the list that will be deleted when you click the Delete button.

If you have deleted more Units while the window was open, or if you have removed items from the list you do wish to delete, you can use the Refresh List button to rebuild the list.

Note that all Units that you haven’t removed from the list are deleted when you press the delete button, NOT just any that you have selected in the list. Remove any Units from the list that you wish to keep the data for before pressing the delete button.

Rebuilding and Reindexing the database:

The Database menu contains commands to verify and to rebuild the entire database. The individual Unit windows also contain buttons to run a verify or a rebuild on that Units data only. These are CPU intensive tasks and the entire rebuild of data is a very memory and time intensive task and should be run only if there is a problem with missing data or something similar.

Rebuild All Indexes:

This command in the Database menu causes all database indexes to be invalidated and rebuilt. It is the fastest of the options to perform maintenance on the database. The current index on disk and in memory is cleared and then the data file is opened and the first record is read. The position into the file is advanced by the index node offset, currently 500 records (so 500 * 16 bytes for each record) and the date is read and added to the index, until it reaches the end of the file. If an index has become corrupt this will solve the problem.

Rebuild All Data:

This is a very cpu and memory intensive task. One mode of corruption for the data is if dates are written out of order into the file. This does not happen in any way that I am currently aware of but there were bugs in previous versions that could cause this to happen in some circumstances. The data has to be in date order in order for the indexing and search routines to work properly otherwise data may not display in graphs properly or at all.

In order not to lose data that may be out of place the entirety of the data file is loaded into memory. Yes, ALL of it. Then it is resorted by the date field putting it back into sequential order. It is then written back to disk and the index file rebuilt as well. You don’t want to do this if you don’t need to as it takes a long time and uses a lot of system resources while it’s running.

Scan and Repair the Database:

This is the scan and repair that is run nightly if that checkbox is selected in the preferences window. The data files are opened and read through sequentially making sure that each record date is after the previous one. At each index node interval the index is verified to be correct to the offset in the file. If any records are found to be out of order in the data file then only that file is rebuilt rather than forcing a run on the entire database. If any errors are found the information is written to the XTension log file so that you can learn about it later if you wish.

Reap Old Database Records Now:

Every night a database “reap” is run after the nightly scan and repair. This menu item forces it to run right now. Every unit has a setting for how long you want to keep data for the unit. It defaults to forever so you might want to look into the necessity of that for units that update often. Generally having very large data files does not cause any problems and because of the speed of the binary search it doesn’t even really start to bog down searching through it until it becomes truly ludicrously large. How large that is I don’t know. I have many files that are approaching or already exceed a gig in my database and they search and show up in graphs at least as fast as any other unit.

If you have a setting other than “forever” for the individual unit then it is scanned during this process. If there are records at the beginning of the file that predate your cutoff then a temporary file is created to replace it, the data is read and each record that is earlier than you wanted to save is ignored and when data is reached that is within the time frame you wish to keep the data is transferred to the new file. When complete the old file is swapped with the temporary file and then deleted. The index file is rebuilt to represent the new offsets of the data and all disk caches and memory arrays are invalidated so they know to reload from the actual data the next time they are needed.

Database File Format:

There are 2 files for each unit storing data in the XTdb database. They are named “unit “ and then the unique ID of the unit as assigned in XTension. This is a unique numerical ID and not really human readable. If you need to find the files for a specific unit you can highlight it in the Database list window and then select “Reveal Unit Data File In The Finder” from the Database menu.

The data file has the suffix of “XTdbd” and the index file has the suffix file “XTdbi” The index file is less important and can always be rebuilt by XTdb if necessary for whatever reason. The layout of the data file is 16 bytes for each entry. The first 8 is a “double precision” number that contains the total seconds of the date of the event. The second 8 bytes contains a double precision number which is the value of the Unit from XTension when the value changed. XTension uses double precision values for all unit values so that they can contain fractions and not just whole integers. There are limitations to the double precision numerical format that you should be aware of by reading the Wikipedia Article on the subject.

The index file contains 8 bytes for each date record again in double precision format. A date is written to the index file for every 500 data entries in the data file. Making it much faster to get to within 500 records of the correct placement in the data file. This index interval may change at any moment however as I continue to optimize the system. At this moment all index arrays are loaded into memory at startup and maintained there as well as on disk for faster searching. This may change in the future if I need to switch to only caching the most often used indexes due to memory usage or something similar.

When performing a query XTdb finds the nearest start date in the data file by performing a Binary Search on the index file. The nearest date BEFORE the requested start date is found and that offset into the index is multiplied by the index interval (currently 500) in order to find the approximate placement in the data file. The indicated node of the data file is loaded, either from disk or from the disk cache if already loaded, and another Binary Search is performed on that section of the data to find the real, or closest without going over, start date within the file.

After finding the nearest start date the data is read sequentially until the dates equal or exceed the stop date for the query.

Appending New Datapoints:

When the value for a unit changes, or when an on/off event happens that needs to be stored in the database that information is sent to XTdb. In most cases the new activity date coming with the data is after the last data point that is already saved and so the new date and value can be written to the file as is. If the new value puts the record count over the next index point then the date is also appended to the index file and the in memory array of date offsets.

If the new value or event from a unit has an activity date associated with it that is before the last value already in the file then that data is considered to be in error and thrown out. It would be theoretically possible to find the correct place in the file for the new data and insert it, recreating the rest of the file and the indexes after doing so, but there seems no point to adding this ability since data should only ever come in that is increasing in time and not randomly forwards and backwards.

The exception to that is if an update is received that has the same date as the last data point. Since the dates use the total seconds value as the resolution of the database, it is limited to one value or event in any given second. This seems perfectly fine for almost any data that regular home automation software should need to deal with. It is possible for something to send repeats or for a sensor or other device to send more than one update in a second however rare this may be. If an update is received with an activity date in the same second as the last value saved the value is compared to the last value. If it is the same then the update is discarded as it wouldn’t change anything. If it is a different value then the data file is backed up by 8 bytes and the new value is written in place of the original one. This is an expensive operation CPU wise and should be avoided whenever possible.

In order to preserve the limited write cycles of modern SSD drives new data is only written out to the disk every few seconds maximum so that writes aren’t happening with every data point constantly and thereby using up the drive. This does mean that if the program crashes or the computer hangs or panics it is possible to lose the last few seconds of data. This seems a decent tradeoff for using up expensive SSD drives, some of which are internal now days and not replaceable. An option to not do this delayed disk writing is not currently available but is possible if anyone has a need for such a thing please let me know. Also let me know why you would want that as it might be a bigger problem that I could solve if the program is crashing regularly or something like that.

Once the database has been managed the event is sent on to any graph or gauge that includes that unit. Since Graphs and Gauges can contain many units the redrawing and distributing of new renders is not done inline with the data reception. Instead the new value is added to the cached query data for the graph and the graph is added to a redraw queue. This way if several units get updates closely to each other it will only result in the graph redrawing a single time with all the new data rather than redrawing for each unit that updates and sending multiple updates immediately after each other. As of this writing there are 3 rendering threads that look for any graphs in the queue, try to render them and send updates to the graph image, and then go to sleep for half a second before looking again. This way if there is a large graph that takes a long time to render other graphs can begin their rendering while it is still reading data or rendering the graphics for it.